YouTube quietly rolled out a policy update this week that, on November 17, will start restricting a broader category of gaming videos depicting realistic violence, fundamentally changing how millions of viewers access some of the platform's most popular content.

The new rules target videos showing realistic human characters engaged in mass violence against non-combatants or scenes involving torture within games. Once flagged, these videos become inaccessible to anyone under 18 and to users not signed in to a YouTube account.

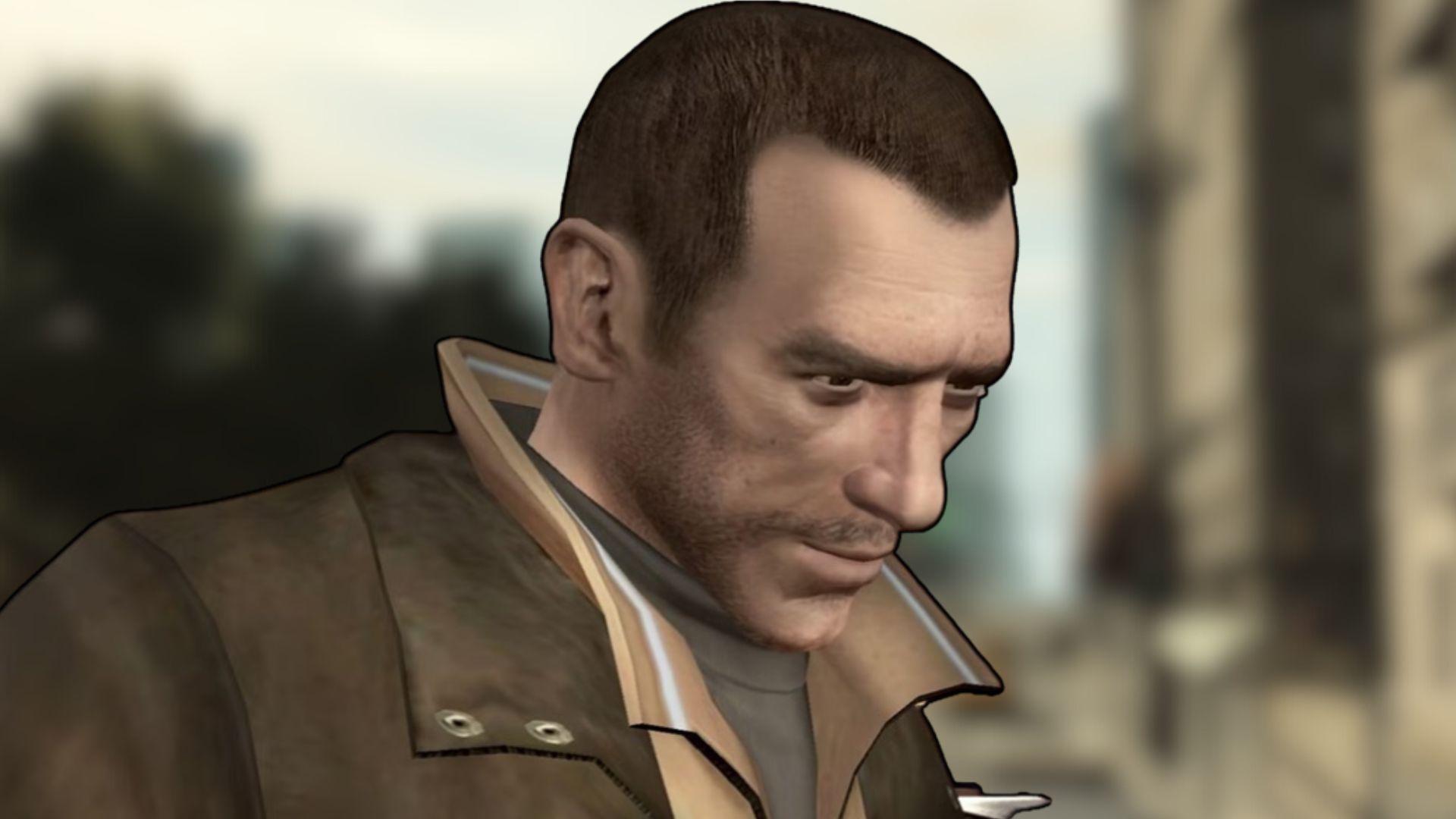

TL;DR: anyone under 18 might find it difficult, if not impossible, to watch Grand Theft Auto 6 videos on YouTube as we inch closer to the game’s release on May 26, 2026.

YouTube clarified that several factors will determine whether content gets restricted. The length of violent scenes matters, as does how prominently the violence features in the video. Creators do have options to avoid restrictions by blurring imagery or adjusting how scenes are framed, but these workarounds require additional editing work that could slow down production schedules.

Grand Theft Auto has always centered on giving players freedom to engage in criminal activity and violence within its open worlds. Players routinely upload clips of themselves causing chaos, evading police after acquiring maximum wanted levels, or participating in the kind of mayhem that defines the series.

Under the new policy, much of that content could end up age-restricted. GTA 6 features photorealistic graphics that make its human characters look more lifelike than ever before. Combine that with gameplay that often involves violence against civilians and law enforcement, and GTA videos look like exactly the kind of content the new YouTube policy was made for.

The update could push channels to change their content strategies, which feels like bad timing considering what Rockstar Games reportedly has planned for the upcoming sixth installment. Some channels may move toward less graphic games or focus more on commentary than on raw gameplay footage.

Others might invest time in editing tools to blur violence or crop scenes in ways that satisfy the new requirements. Either approach means extra work and potentially compromised content quality.

For years, YouTube treated video game violence somewhat differently from other content. The understanding was that dramatized virtual violence remained acceptable for wider audiences as long as the context made clear it was from a game. That implicit agreement is now ending.

With the update, YouTube is essentially saying that as games become more photorealistic, the distinction between virtual and real violence matters less from a moderation standpoint.

Regulators are taxing violent video games, and parents are increasingly questioning whether moderation and recommendation systems adequately protect younger users, especially when dramatized virtual violence becomes visually indistinguishable from real violence. Against that backdrop, YouTube’s stance is to stay at least one step ahead of any regulatory pressure.

It will be interesting to see whether YouTube’s new guidelines set a precedent for others to follow, or if competing platforms will use this as an opportunity to steal content creators and audiences who will inevitably leave Alphabet’s streaming arm.